DuckSoup

10 April 2023, Pablo Arias Sarah

Introduction

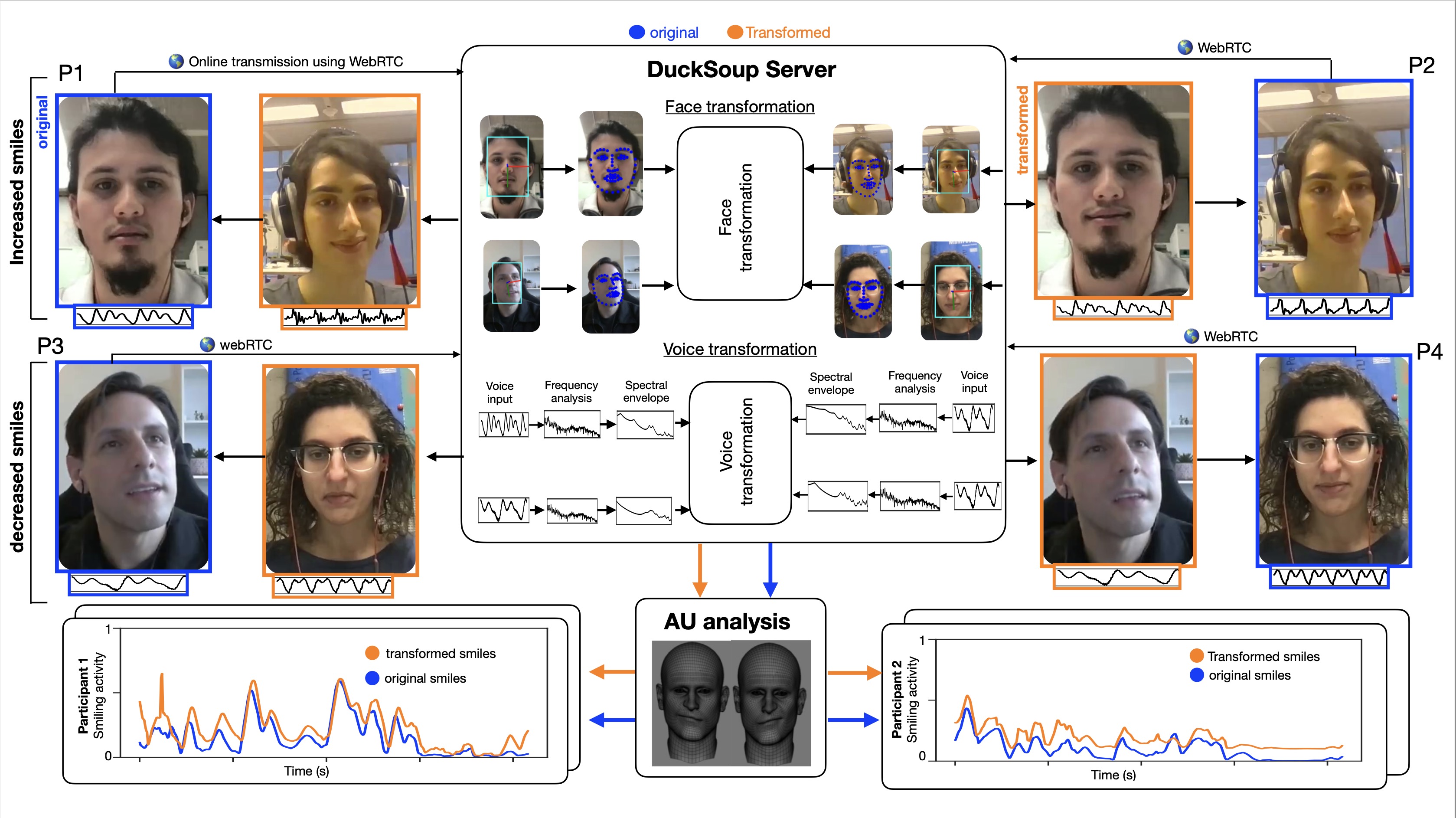

It is remarkably difficult for social cognition research to study interactive contexts. Current experimental paradigms are either restricted to correlational findings or hampered by low ecological validity. To overcome these limitations, we created the experimental platform DuckSoup. DuckSoup is an open-source videoconference platform where researchers can manipulate participants' facial and vocal signals in real time using voice and face processing algorithms (e.g., artificially increase the smiles of one participant, or enhance their vocal intonations). DuckSoup opens the door to covertly controlling social signals in free and unscripted social interactions. We hope this methodology will help us improve the ecological validity, experimental control, and data collection scalability of social interaction research, while allowing researchers to study the causal effects of social signals during social interactions.

Status

DuckSoup is functional but still under development. We are currently collecting the first datasets with it and testing all functionalities. If you are interested in using it, the easiest way is to get in touch! In the meantime, the open-source code can be found here. Feel free to have a look!

What is it?

DuckSoup is a Go application built to run server side and communicate with several participants (clients). DuckSoup uses the GStreamer C library to transform audiovisual inputs, and the Pion WebRTC library to communicate with regular browsers. During experiments, DuckSoup (1) receives audiovisual streams from N participants through WebRTC, (2) decodes them in parallel using graphics processing units (GPUs), (3) transforms them using predefined video/audio processing pipelines, (4) encodes them and (5) retransmits them with WebRTC. DuckSoup can be embedded in any webpage. Video/audio inputs and outputs can be routed as needed by the experimenter. We are sharing DuckSoup as a free and open-source platform under an MIT license.
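The receive, decode, transform, encode, retransmit loop described above can be pictured with a small Go sketch. This is not DuckSoup's actual code: `Packet`, `Step`, `Chain`, `Tag` and `RunAll` are hypothetical names introduced for illustration, and the stages are placeholders that only record their own name instead of calling WebRTC or GStreamer. The sketch only shows the shape of the idea: stages composed in a fixed order, with each participant's media handled concurrently.

```go
package main

import (
	"fmt"
	"sync"
)

// Packet is a hypothetical stand-in for one chunk of media from one participant.
type Packet struct {
	Participant string
	Data        string
}

// Step is one stage of the loop. In a real server a stage would wrap
// WebRTC or GStreamer calls; here it is a plain function (an assumption).
type Step func(Packet) Packet

// Chain composes steps so that they run left to right.
func Chain(steps ...Step) Step {
	return func(p Packet) Packet {
		for _, s := range steps {
			p = s(p)
		}
		return p
	}
}

// Tag builds a placeholder stage that only appends its name to the payload,
// which makes the order of the stages visible in the result.
func Tag(name string) Step {
	return func(p Packet) Packet {
		p.Data += ">" + name
		return p
	}
}

// RunAll pushes one packet per participant through the pipeline, using one
// goroutine per packet, and returns the results keyed by participant.
func RunAll(in []Packet, pipeline Step) map[string]Packet {
	out := make(map[string]Packet, len(in))
	var mu sync.Mutex
	var wg sync.WaitGroup
	for _, p := range in {
		wg.Add(1)
		go func(p Packet) {
			defer wg.Done()
			res := pipeline(p)
			mu.Lock() // the map is shared between goroutines
			out[res.Participant] = res
			mu.Unlock()
		}(p)
	}
	wg.Wait()
	return out
}

func main() {
	pipeline := Chain(Tag("decode"), Tag("transform"), Tag("encode"))
	received := []Packet{
		{Participant: "alice", Data: "frame"},
		{Participant: "bob", Data: "frame"},
	}
	for who, p := range RunAll(received, pipeline) {
		fmt.Println(who, p.Data)
	}
}
```

Keeping each stage behind the same function type means a different transform can be swapped in without touching the surrounding loop, which mirrors the idea of predefined, interchangeable processing pipelines.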

Dynamic voice and face manipulations.

DuckSoup can use any GStreamer plugin to manipulate video, audio, voice and face signals. Currently available free plugins include vocal pitch shift, reverb, video blurring and mirroring. Moreover, we will be sharing a custom-made open-source face deformation plugin called Mozza that implements an ultra-low latency real-time smile manipulation algorithm based on 2D facial warping. All manipulations can be controlled dynamically during the interactions. For instance, smiles can be triggered at specific times to create time-tagged datasets organised into epochs. Check out an example of DuckSoup in Mirror Mode below, where we dynamically control smile intensity!
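The idea of triggering a manipulation at specific times and then cutting the recording into epochs can be illustrated with a short Go sketch. It is a minimal illustration under stated assumptions, not the control API of DuckSoup or Mozza: `Event`, `Epoch`, `ValueAt` and `Epochs` are hypothetical names, times are plain seconds from the start of the interaction, and the intensity scale is arbitrary.

```go
package main

import (
	"fmt"
	"sort"
)

// Event is a hypothetical time-tagged change of a manipulation parameter.
type Event struct {
	At    float64 // seconds since the start of the interaction
	Value float64 // e.g. smile intensity; the scale is an assumption
}

// Epoch is a time window cut around one event.
type Epoch struct {
	Start, End float64 // seconds
	Value      float64
}

// sortedCopy returns the events ordered by onset without touching the input.
func sortedCopy(events []Event) []Event {
	s := append([]Event(nil), events...)
	sort.SliceStable(s, func(i, j int) bool { return s[i].At < s[j].At })
	return s
}

// ValueAt returns the value in force at time t: that of the latest event
// with At <= t, or def when no event has fired yet.
func ValueAt(events []Event, t, def float64) float64 {
	v := def
	for _, e := range sortedCopy(events) {
		if e.At > t {
			break
		}
		v = e.Value
	}
	return v
}

// Epochs cuts a window [At-pre, At+post] around every event, clamping the
// start at zero so that no window begins before the recording.
func Epochs(events []Event, pre, post float64) []Epoch {
	out := make([]Epoch, 0, len(events))
	for _, e := range sortedCopy(events) {
		start := e.At - pre
		if start < 0 {
			start = 0
		}
		out = append(out, Epoch{Start: start, End: e.At + post, Value: e.Value})
	}
	return out
}

func main() {
	// Hypothetical schedule: raise the intensity at 10 s, reset it at 20 s.
	schedule := []Event{{At: 10, Value: 1.0}, {At: 20, Value: 0.0}}
	fmt.Println(ValueAt(schedule, 15, 0))
	for _, ep := range Epochs(schedule, 2, 5) {
		fmt.Printf("%.0f-%.0f s, value %.1f\n", ep.Start, ep.End, ep.Value)
	}
}
```

Storing the schedule alongside the recordings is what makes the dataset time-tagged: at analysis time, the same list of events can be used to look up which manipulation was active at any moment and to extract the windows around each onset.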

Data collection.

With a suitable network configuration (e.g., open UDP ports) and sufficient server-side computational power (e.g., a 24-core CPU and a GPU able to encode/decode unlimited concurrent sessions), DuckSoup can handle dozens of participants interacting in parallel, while recording both manipulated and non-manipulated audiovisual streams. DuckSoup can be used in conjunction with the open-source platform oTree to orchestrate interactions while collecting behavioral responses in any kind of interactive setting, such as cooperation games, job interviews or brainstorming sessions.

Data analysis.

DuckSoup records synchronised video datasets, which makes them suitable for analysis with recent artificial intelligence voice/face analysis technology (e.g., face detection, landmark tracking, action unit extraction, movement extraction, facial muscle extraction, speech transcription, voice analysis, heart rate monitoring).

References

We are working on a methodological preprint explaining DuckSoup in depth. It will be entitled "DuckSoup: An open-source experimental platform to covertly manipulate participants’ facial and vocal attributes during social interactions". We hope to release it soon.

Team

Pablo Arias Sarah is leading the project, and the main developer is Guillaume Denis. We developed DuckSoup with the help and input of Jean-Julien Aucouturier, Petter Johansson and Lars Hall.